A while ago, I had a very brief chat with Mac Gerdts (the author of Concordia) about whether or not someone had approached him to do Concordia for iOS. It was an interesting discussion and he thought that getting the AI right would be the trickiest part. My 2 cents were that – in most cases – doing board games on iOS is unfortunately not a (financially) viable enterprise.

Blog

News, Updates and General Ramblings

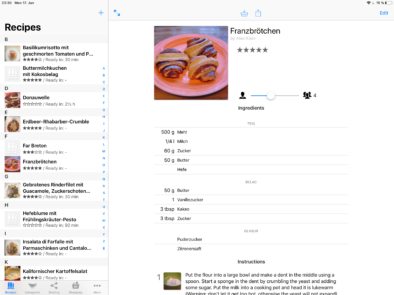

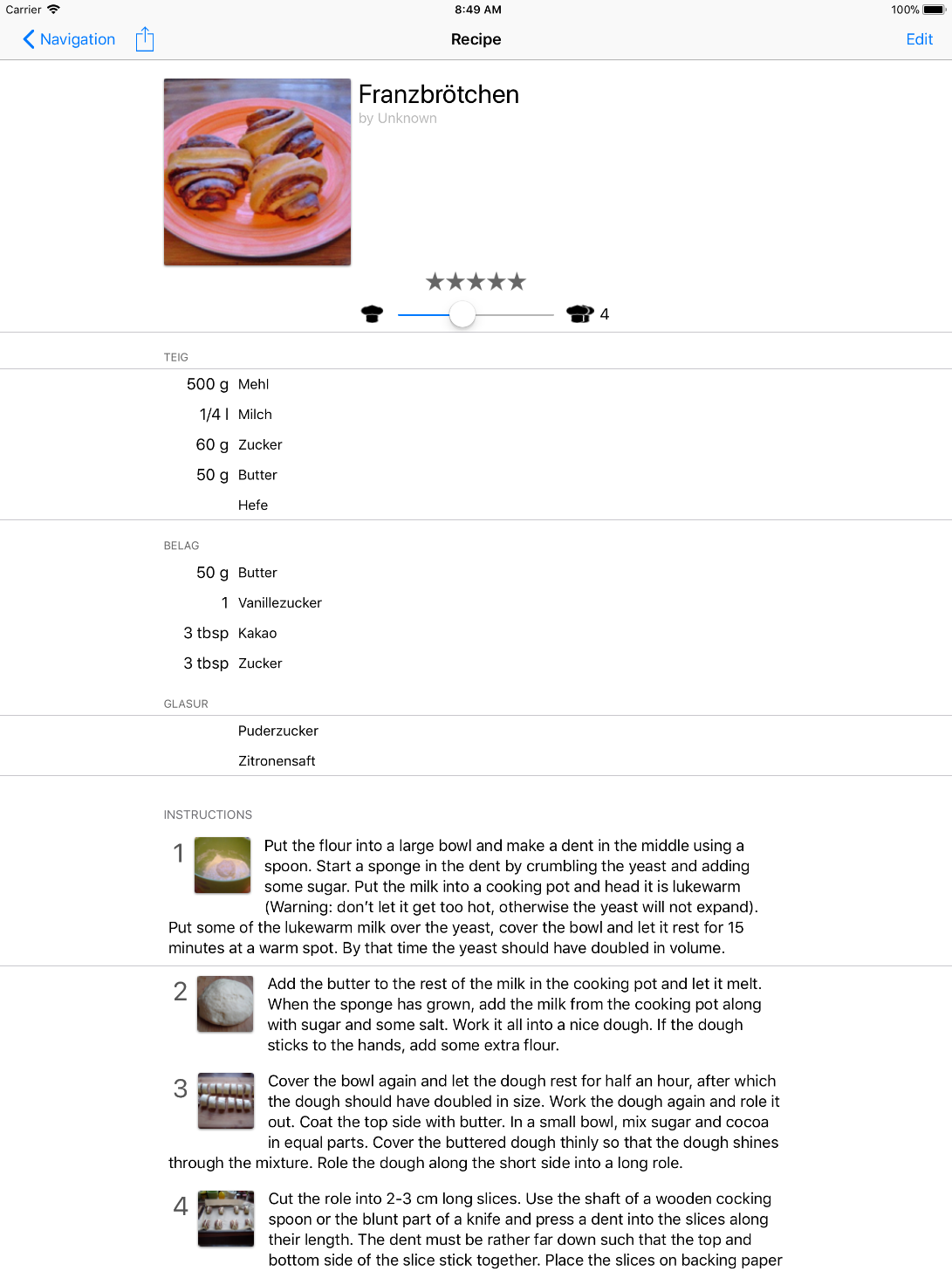

My Favorite Recipes Is Back in the AppStore

It’s back! After a massive overhaul a while ago, I finally managed to tie up all the loose ends and resubmit the app to the AppStore. The app first hit the AppStore almost 10 years ago!

That meant porting it from what basically was iOS 3.1 code to iOS 12, switching to a Storyboard-based interface, creating an iPad version, supporting Dynamic Type (resizable fonts) and many, many more things that iOS users today simply expect. It’s still not the most flashy recipe app, but darn it if it isn’t the one with the best user interaction : )

Unfortunately, I haven’t managed to implement iCloud syncing yet, which would be very handy for keeping my iPhone and iPad in sync. But implementing this correctly will take some serious time, so I opted to get the app out into the wild again and add features like that later.

I also dropped the price to the lowest tier. It will be interesting to see what effect that has.

My Favorite Recipes – Moving from iOS 3 to 11

Due to popular demand, I’ve reactivated my recipe management app for iOS. It dropped out of the AppStore with the release of iOS 11 and the requirement that apps had to be 64-bit only from that point on. Truth be told, the code had gotten a bit dated as the initial version was launched all the way back in September 2009. As a frame of reference, the first ever iOS SDK was launched in March of 2008! So we are talking the early days of iOS…

As a consequence, getting the app ready for iOS 11 would have taken a major rewrite and I didn’t have THAT MUCH time to spend on this project. But after a couple of users contacted me, I was curious: what does it take to bring an old iOS app up to speed with the latest in iOS technology? Also, I like my 12.9″ iPad Pro a lot and it always seemed a shame that recipes didn’t run on it!

This post is meant as a short review/history lesson in iOS development and its evolution through the ages. For more of an end-user perspective on the app itself, I’ll update the app’s page soon. But what I can tell you is: Boy, things have changed over the years! While the database schema and the data layer required hardly any adaptation at all, pretty much every line of code in the user interface layer had to be changed. Unfortunately, for a recipe app the user interface is about 80% of the code!

Autolayout, Dynamic Type and Storyboards

The biggest advancement in iOS comes in the form of Auto Layout and Storyboards. Back in the day, life was simple: the iPad didn’t exist, all iPhones had the same screen resolution of 320×480 (no Retina, no larger iPhone 5 aspect ratio, etc.) and the general consensus was to avoid Interface Builder and rather do everything in code. Nowadays, we have iPhones and iPads of various physical sizes and resolutions, iPad apps can run in split screen with other apps and so on. So a) you have no idea at what resolution the user will run your app and b) even if you do, it can change at any moment (for example if the user starts another app side-by-side on iPad).

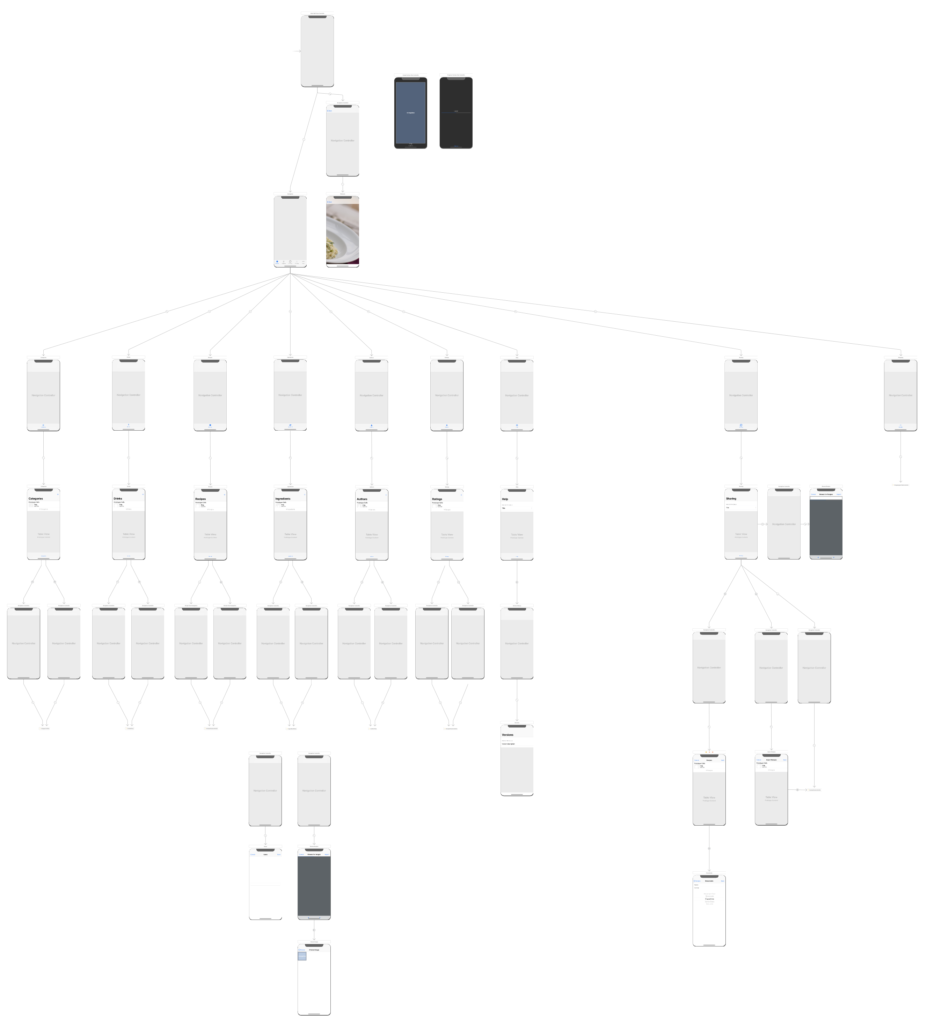

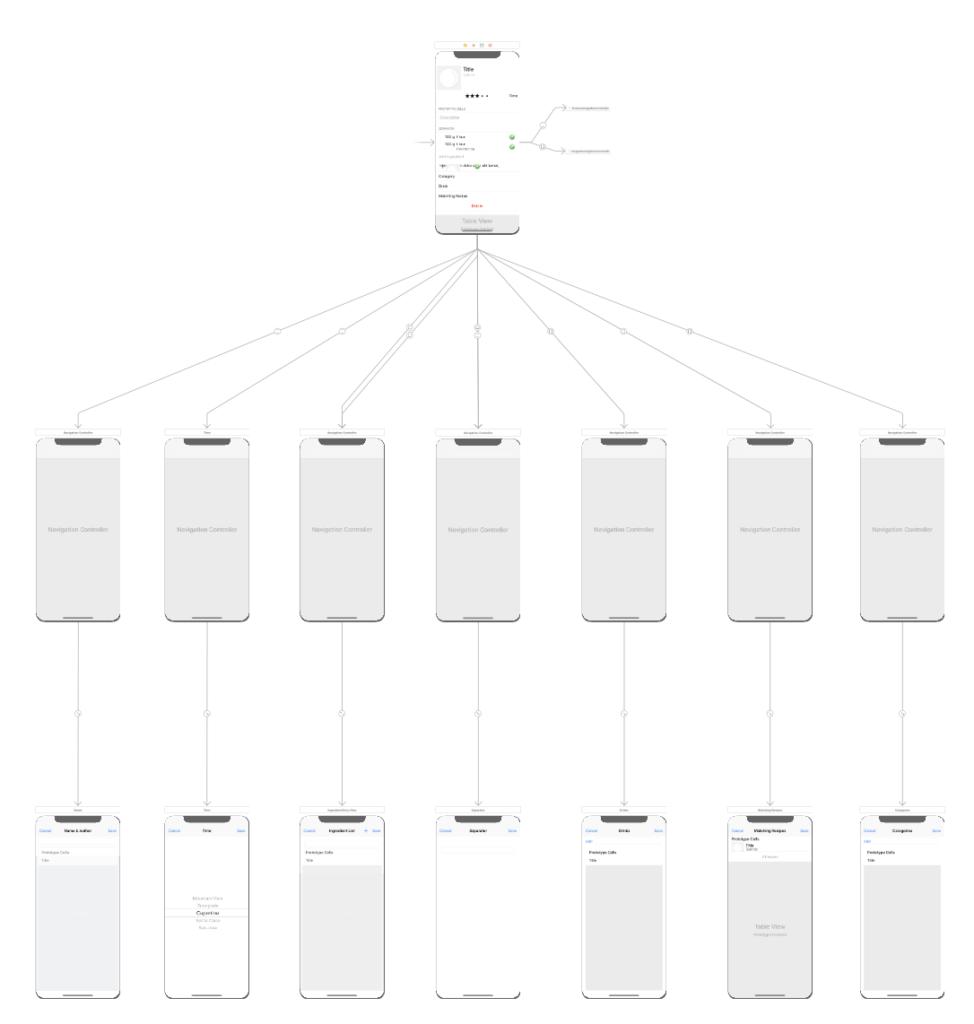

The only sensible way of handling this is to use Storyboards: They are a visual way of designing the UI and enforce splitting controls / layout from the controller classes that implement behaviour. The nice thing about Storyboards is that they allow connecting different pages of the app with transitions called “Segues” and thus represent the whole user flow of the app.

Microsoft tried to do a similar split with WPF/XAML/Expression Blend: Have one language/tool for the UI designer and another for the developer. Well, it didn’t work there because most designers cannot code and most developers cannot design. But when you try to implement a new feature, you need both aspects at the same time.

The reasons why it seems to work here are:

- The design language of iOS is more restrictive and as long as you stick to it, “designing” a user interface amounts to placing controls and not worrying about pixel spacing. Even if a company decides to enforce its own corporate identity (CI), the framework and tools actively “encourage” the use of standard controls, gestures and animations. E.g. if you stick to the standard font definitions (“body”, “caption”, “headline”, …) instead of using custom font sizes/types, you get Dynamic Type support (see below) for free.

- Storyboards – even when only used as a developer-non-designer tool – can be used as a means of communication with a designer. The developer can do the basic layout and transitions and then show the Storyboard to the designer. It really gives you a nice overview of the user flow of the app.

- Clear view/controller-separation: In the Microsoft WPF world, people started to use weird XAML-constructs to put code into their UI-description that would have belonged in the controller. Since Interface Builder doesn’t give you options to do that, you end up with better code and a better chance of being able to reuse the same controller in different views of the application.

Unfortunately, Interface Builder gets slower the larger a Storyboard gets. So I had to split mine into a main one and sub-Storyboards for the individual tab-contents (such as the main recipe, shopping list, sharing, etc). Even on my well-specced 13″ MacBook Pro, loading the Storyboard below freezes Xcode for 20-30 seconds!

In addition, Storyboards support variants such as changing individual font sizes or switching a horizontal to a vertical layout depending on the available screen space. One can even add/remove individual controls and it is all handled pretty much for free.

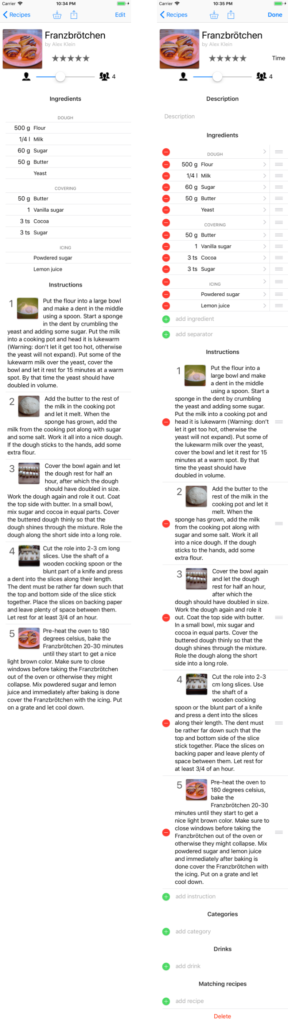

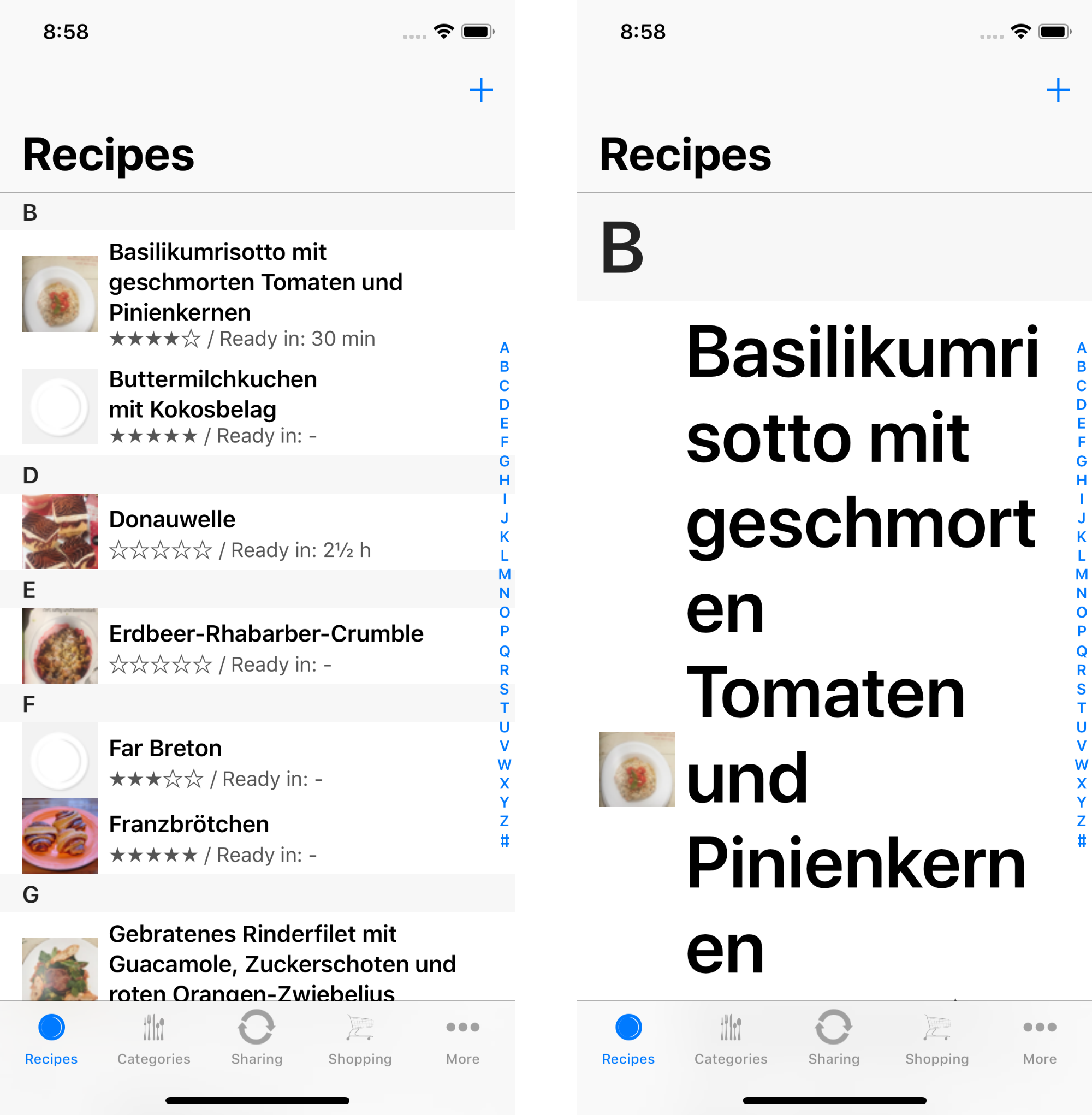

So yes, starting with Recipes 2.0 the app will finally support iPads as well! Note in the screenshot below that the layout automatically adapts to the huge iPad Pro 12.9″ screen size and increases the left and right margins. This is one of the many small things iOS does pretty much out of the box for you if you stick to using the system default layout margins.

Another recent addition to the iOS world is Dynamic Type. What this means is that the user can increase (or decrease if your eyes are good enough) the font size in the system settings and – if your app supports it – the app changes the layout. The changes can be pretty dramatic, especially for the huge accessibility font sizes.

Dynamic Type is one of those features where you listen to the WWDC session, think “hey, that should be done in a few hours” and then it takes a couple of days. The first 90% is pretty easy and basically amounts to setting font sizes correctly, enabling multi-line UILabels, dynamic cell height, etc. Then comes the hard part:

- If you have any label that uses a custom font size/type (instead of the predefined “body”-, “caption”-, …- styles), Dynamic Type won’t scale the font. You have to write code to do that.

- If you want to use a different layout when the user switches to one of the huge accessibility sizes, you have to programmatically remove the auto layout constraints and add new ones.

- If you use standard subtitle cells, the resizing does not seem to work correctly.

- …

It’s all doable. However, I kept having the feeling that Dynamic Type isn’t as “out of the box” as the WWDC session leads you to believe. In the end it took quite a lot longer than I expected, but it’s a great feature to have.
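To give an idea of the extra code the first two points involve, here is a minimal sketch in Swift. It is not the app's actual code: the helper names, the font name and the base size are made up for illustration, and the layout switch uses a stack view's axis as a simpler stand-in for removing and re-adding constraints.

```swift
import UIKit

// Hypothetical helper: returns a custom font that follows the user's
// Dynamic Type setting. Font name and base size are illustrative only.
func scaledCustomFont(named name: String, size: CGFloat,
                      textStyle: UIFont.TextStyle = .body) -> UIFont {
    // Fall back to the system font if the custom font cannot be loaded.
    let base = UIFont(name: name, size: size) ?? UIFont.systemFont(ofSize: size)
    // UIFontMetrics (iOS 11+) scales an arbitrary font relative to a text style.
    return UIFontMetrics(forTextStyle: textStyle).scaledFont(for: base)
}

// Hypothetical helper: stack the controls vertically once the user picks
// one of the accessibility sizes. Meant to be called from
// traitCollectionDidChange(_:) in a view or view controller.
func updateAxis(of stack: UIStackView, for traits: UITraitCollection) {
    let isHuge = traits.preferredContentSizeCategory.isAccessibilityCategory
    stack.axis = isHuge ? .vertical : .horizontal
}

let label = UILabel()
label.font = scaledCustomFont(named: "Georgia", size: 17)
label.adjustsFontForContentSizeCategory = true  // re-scale when the setting changes
label.numberOfLines = 0                         // allow wrapping at large sizes
```

If the layout cannot be expressed with a stack view, the same `isAccessibilityCategory` check can decide which set of constraints to activate.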

As a bonus, this finally fulfils the user request to have multi-line cell labels to support very long recipe names!

iOS 11 Style

Compared to iOS 3, the style of iOS has changed a lot. So I had to redesign:

- the app icon (much flatter and simpler design)

- tab icons (still working on it)

- placeholder icons (still working on them as well)

- interaction mechanisms (such as export using the standard iOS share sheet instead of custom menus)

- control layout (users have different expectations where to find what nowadays)

- buttons (flat instead of the old “glass button” look)

- dialog interaction styles on iPad (popups, modal dialogs, …)

- …

The actual coding for Recipes 2.0 is complete. However, I’m still struggling to find a consistent style for all the tab-icons, placeholder images and so forth. On the one hand, it seems idiotic to not release the app right away just because some icons look crappy. On the other hand, after spending so much time on redoing the layouts and improving the usability, it would be sad to lose potential users just because the icons look crappy.

Objective-C and ARC

Under the hood, things have also changed. Someone asked me recently whether they changed for the better or worse. I guess it depends. The world was simpler back in the day: there was only one screen size, multitasking was limited, no device syncing, no Dynamic Type, … In general, user expectations have grown with the maturity of the platform. So while it has gotten way easier to write a basic app, all that improvement has been eaten up by having to support more features.

On the level of programming language, things have noticeably improved:

- Automatic Reference Counting (ARC) gets rid of all the memory management code

- Properties are auto-synthesized by default (the getter/setter are added by the compiler)

- Static and Runtime Analyzers help reduce the number of bugs

- Grand Central Dispatch (GCD) and blocks (=lambda expressions) make it easier to write multi-threaded code. Using NSOperation or performSelector to dispatch code to a background thread polluted the class scope because you needed a separate method to be called. Things got even worse because UI elements have to be updated from the main thread, which led to triples of methods: - (void)doSomething, - (void)doSomethingOnThread and - (void)doSomethingAfterThread.

- Using blocks instead of delegation: The new pattern seems to be to set functors (=blocks) instead of having to implement a delegate protocol. As with the previous point, this helps keep code that belongs together in the same place.

- …
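To illustrate the GCD point, here is a small sketch of how the three-method pattern collapses into one function. It is written in Swift rather than the app's Objective-C, and `parseRecipeTitles` and `loadTitles` are hypothetical names standing in for any slow piece of work.

```swift
import Foundation
import Dispatch

// Hypothetical stand-in for slow work such as parsing an imported recipe file.
func parseRecipeTitles(from text: String) -> [String] {
    return text.split(separator: "\n")
        .map { $0.trimmingCharacters(in: .whitespaces) }
        .filter { !$0.isEmpty }
}

// Runs the work on a background queue and hands the result to `completion`
// on `callbackQueue` (the main queue by default, since UI updates must
// happen there).
func loadTitles(from text: String,
                callbackQueue: DispatchQueue = .main,
                completion: @escaping ([String]) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async {
        let titles = parseRecipeTitles(from: text)   // background thread
        callbackQueue.async {
            completion(titles)                       // back on the callback queue
        }
    }
}
```

The start of the work, the background part and the follow-up on the main thread now sit next to each other in a single function, which is exactly the "code that belongs together stays together" effect described above.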

Misc Stuff

There is also lots of small stuff that has changed over the years. Here are just some of the more noteworthy things.

JSON-LD and the Structured Web

My Favorite Recipes was one of the first apps to use meta-information provided in HTML pages to extract and import recipes from websites. This was based on three formats (Microdata, Microformats and RDF) that the Google Recipes initiative had supported. At some point, they switched to JSON-LD which Recipes 2.0 now also supports. This is a nice, structured way to make web pages machine readable and way easier to implement than the older formats.
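To show why JSON-LD is so much easier to handle, here is a minimal Swift sketch of the idea. It is not the app's import code: `extractRecipe(fromHTML:)` is a hypothetical helper, and it assumes the page embeds a single top-level schema.org Recipe object (pages that wrap their data in arrays or an "@graph" would need more handling).

```swift
import Foundation

// Minimal sketch: return the first JSON-LD block whose "@type" is "Recipe".
// Assumes each block's top level is a single JSON object.
func extractRecipe(fromHTML html: String) -> [String: Any]? {
    let pattern = "<script[^>]*type=[\"']application/ld\\+json[\"'][^>]*>(.*?)</script>"
    guard let regex = try? NSRegularExpression(
        pattern: pattern,
        options: [.caseInsensitive, .dotMatchesLineSeparators]) else { return nil }

    let range = NSRange(html.startIndex..., in: html)
    for match in regex.matches(in: html, options: [], range: range) {
        // Capture group 1 is the JSON text between the script tags.
        guard let bodyRange = Range(match.range(at: 1), in: html),
              let data = String(html[bodyRange]).data(using: .utf8),
              let object = try? JSONSerialization.jsonObject(with: data),
              let dict = object as? [String: Any],
              dict["@type"] as? String == "Recipe" else { continue }
        return dict
    }
    return nil
}
```

Once the dictionary is available, fields such as "name" or "recipeIngredient" can be read directly, with no HTML attribute walking as in Microdata or RDF.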

Unit Tests and UI Tests

I cannot remember if Xcode actually offered unit test integration back in the day but nowadays it’s there. I currently use unit tests for the import/export code and UI tests for pretty much everything else. Recipes is such a UI-heavy application that there isn’t much code where non-UI unit testing makes sense.

As on other platforms, UI testing works by using an app’s accessibility support to identify individual controls. So as long as you set proper accessibility labels (which you should anyway to support blind users), things are ready to go. The record functionality in Xcode seems great at first as it creates test code while you run the app in the simulator and tap on the various controls. However, I’ve found that it often doesn’t work or produces crappy code, so I just use it as a quick way to identify controls and then rewrite the test code manually.
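A hand-written UI test of this kind might look roughly like the following Swift sketch. The test class, the "Pancakes" label and the "recipeTitle" identifier are invented for illustration and are not the app's real values.

```swift
import XCTest

final class RecipeListUITests: XCTestCase {
    func testOpeningARecipeShowsItsTitle() {
        let app = XCUIApplication()
        app.launch()

        // "Pancakes" and "recipeTitle" are hypothetical accessibility
        // label/identifier values chosen for this example.
        let cellLabel = app.tables.cells.staticTexts["Pancakes"]
        XCTAssertTrue(cellLabel.waitForExistence(timeout: 5))
        cellLabel.tap()

        // The detail screen should show up with its title label.
        let title = app.staticTexts["recipeTitle"]
        XCTAssertTrue(title.waitForExistence(timeout: 5))
    }
}
```

Waiting for elements with `waitForExistence(timeout:)` instead of asserting immediately tends to make such tests less flaky when screens animate in.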

Unified Logging

It’s a small thing, but it is welcome. Apple has introduced a unified logging system (unified in the sense that it behaves the same on all of their platforms) which replaces NSLog. It’s pretty easy to use and allows grouping log messages into sub-categories which is nice.
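As a rough sketch of what this looks like in Swift (the subsystem and category strings and the `logImportResult` function are placeholders, not the app's real ones):

```swift
import Foundation
import os

// One log object per sub-category; the strings are illustrative placeholders.
let importLog = OSLog(subsystem: "com.example.recipes", category: "import")

func logImportResult(url: String, recipeCount: Int) {
    // %{public}@ marks the string as safe to show in the log; dynamic
    // strings are otherwise redacted by default.
    os_log("Imported %ld recipes from %{public}@", log: importLog, type: .info,
           recipeCount, url)
}
```

The category then shows up as a filter in the Console app, which is what makes the grouping useful in practice.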

Files App

Back in the day, one of the most common user questions was “how do I get my recipe files into the app”. The old upload-via-iTunes mechanism still exists but by using the standard file browser, there is now a nice, unified interface for it. And if you use iCloud Drive, it’s part of the same dialog and makes moving files from your desktop to the app even easier.

Browser

The new WebKit-view makes it easier to have a fully functional embedded browser. Over the years, websites have changed and much of the content you see on a page is actually loaded via JavaScript and not part of the original HTML page. The new browser control makes it possible to grab the web content as it is rendered and thus produces way more reliable results when trying to search websites for the recipe information contained in them.
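One way to grab the rendered content is to ask the page for its current DOM once loading has finished. The Swift sketch below shows the idea; `RecipePageLoader` is a hypothetical class, not the app's code, and pages that keep loading content after the "did finish" callback would need an additional delay or polling.

```swift
import WebKit

final class RecipePageLoader: NSObject, WKNavigationDelegate {
    private let webView = WKWebView(frame: .zero)
    private var onHTML: ((String) -> Void)?

    func load(_ url: URL, onHTML: @escaping (String) -> Void) {
        self.onHTML = onHTML
        webView.navigationDelegate = self
        webView.load(URLRequest(url: url))
    }

    // Called once the main frame has finished loading.
    func webView(_ webView: WKWebView, didFinish navigation: WKNavigation!) {
        // Ask the page for its DOM as it currently stands, including
        // elements that scripts inserted after the initial HTML arrived.
        webView.evaluateJavaScript("document.documentElement.outerHTML") { [weak self] result, _ in
            if let html = result as? String {
                self?.onHTML?(html)
            }
        }
    }
}
```

The resulting HTML string can then be fed into whatever recipe extraction is in place, instead of the raw response from the server.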

Share/Action Sheets

Those simply didn’t exist back in the day! Now, a single button in the recipe view allows sharing a recipe, adding it as a note, putting it on the integrated shopping list and more.

Summary

Things have improved a lot over the years. Complexity no longer comes from the language or shortcomings/bugs in the iOS frameworks. It rather depends on what kind of feature/usability level you want to achieve. iOS 11 has a lot of features that aren’t strictly necessary but are kind of expected at this point in time.

I’m wondering what the role of platform-independent UI frameworks or HTML5/JavaScript-UIs is in iOS development. For me, the number of small details (Dynamic Type, readable content margins on large iPads, …) and options for deep system integration (files dialog, share sheet, …) I have found during this project is so large that I wonder what kind of user experience a framework that tries to unify Android and iOS can even provide. My guess is that as long as you “just need an app” they are fine, but for a great user experience you simply need to develop a native UI.

My Favorite Recipes has always tried to behave as much as possible like one of the built-in apps. This meant a lot of work adapting to all the new capabilities of iOS 11 (and I still haven’t had the time to implement iCloud syncing). But finally having an iPad version feels great and using Storyboards has helped to improve the user experience a lot.

Hope you like the new 2.0 version of the app when it comes out!

Website redesign

As you probably have noticed, this website has changed a lot. I’ve moved away from hand-crafted HTML + Tumblr blog to using WordPress now. This allows for a better structure, improved design and should make it easier to post content in the future. It’s also a good occasion for a general status update:

- Recipes: My Favorite Recipes automatically dropped out of the AppStore with the introduction of iOS 11, which requires 64-bit. As you may or may not know, the app has been around for a long time. To be precise, it had its origins in the iOS 2 era, which was the first iOS version one could develop apps for! Over almost a decade, a lot of things change, and the way apps are built today is completely different than back then. Things like multi-tasking, storyboards or auto-layout UIs did not exist. So it isn’t easy to bring such an old app up to iOS 11 but I’ve started the process – which is the main reason for this website revamp. I’ll post more on this soon…

- I’ve converted all the old blog entries but split all content concerning my 3D Modeler project to a separate site. It can now be found at https://metashapes.com. This has been my primary focus over the last couple of years which is also the reason why there was little activity on this site.

- Streetsoccer has been removed from the AppStore for a while now. While I still get occasional requests to bring it back, the license agreement I had with Cwali (the publisher of the original boardgames) has expired and so unfortunately there is no chance to get it up and running again.

StreetSoccer V1.1.0 – German Localization and Movement Area

StreetSoccer V1.1.0 is now heading for Apple review. This again brings a bunch of bugfixes as well as two new features:

- German Localization

- Movement Area Visualization